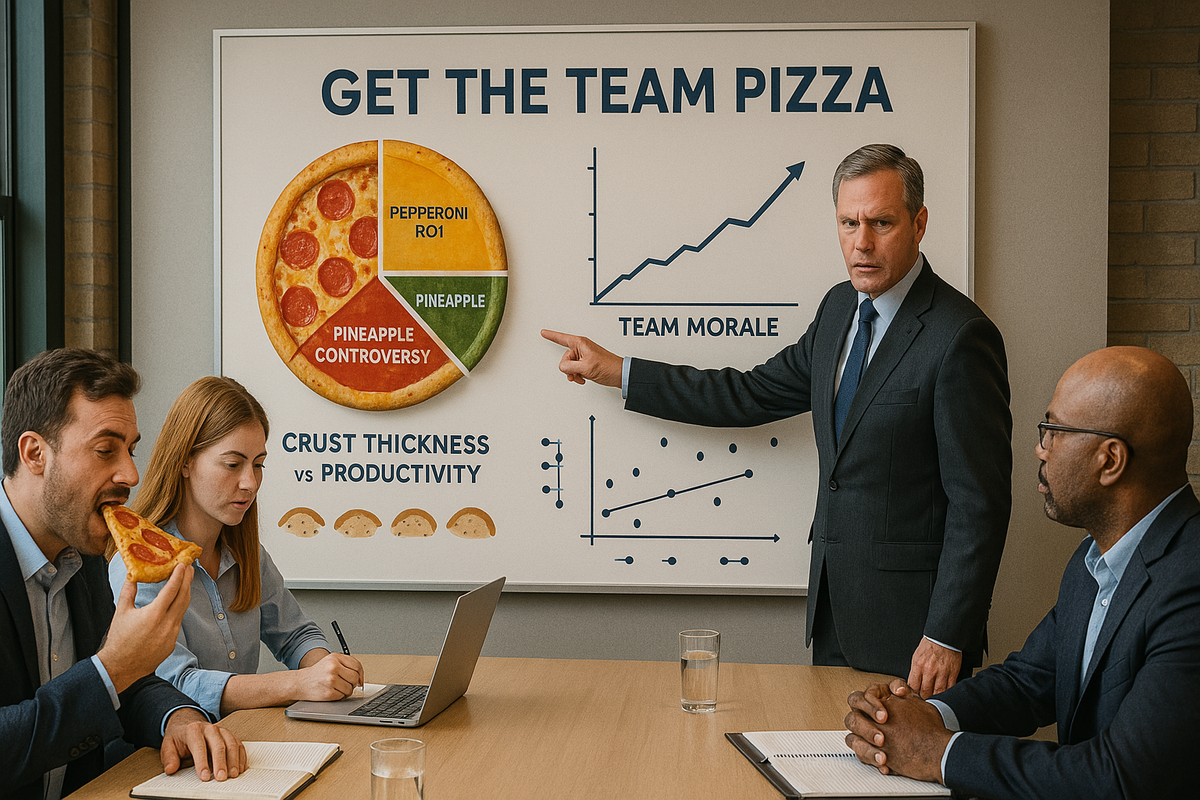

Get The Team Pizza

Gallup's State of the Global Workplace survey of two hundred thousand workers in one hundred and fifty countries found that 85% were either not engaged or actively disengaged at work. Around the world, people are disengaging from work, and I want to understand why.

Disengagement is probably highest in part-time jobs, the kind you get for a summer between semesters at school. These jobs are necessarily short term and represent places where people stop on the way to some longer-term employment. Obviously that is not true for everyone; there are some "lifers" around, but they are the exception to the rule. I remember a manager I had at a job like this who, against all odds, inspired workers to work harder, produce more and feel better about it than some people who have already "found their calling".

This manager would buy pizza for the entire team once a week. Every Friday was pizza day, without fail. We were working at a big chain hotel - premium pricing, rich guests - and Friday was always the busiest night. All 600 rooms would be sold, which meant that it was very busy and there wasn’t much room to move people around if they weren’t satisfied with their rooms. I was on the front desk. There would be maybe 5 of us, about as many bell people to carry bags, and a fully staffed concierge and valet parking. It was the night when the most employees were working. It was both the most expensive night to buy pizza for everyone and the least practical for us all to have time for a break to eat.

The manager would float around, making sure everything was working as expected. Somehow, just when you were getting tired and becoming less effective, he would tap you on the shoulder and send you off for a slice. He made sure there was enough for everyone and used it to get people back to 100% if they were getting tired or frustrated with the unstoppable wave of guests that poured through the door from check-in time until midnight.

Some nights I didn’t eat a slice until the very end of my shift. Some nights I didn’t eat it at all, but it was nice to know it was there. It always arrived around 9 pm. After the peak, but just before everyone was completely burned out. And you knew it was coming. It kept you going. Somehow the hours from 8 to 10 pm passed at double speed. There was pizza in the headlights and we were speeding towards it.

What Pizza has Taught Me

At times when you need all hands on deck, with everyone working at full steam just to get through and finish everything that is required, breaks seem like a luxury that wouldn’t make sense. But the pizza break worked. On Fridays at the hotel, everyone worked harder than on other days. We felt less tired. We got more done per person than on a slow Tuesday. Why? The pizza signalled that this was going to be hard, and that our bosses knew it. It said that they appreciated the effort. It let everyone know that despite the work required, we were all in it together. When you saw someone not leaving for a slice when the boxes arrived, you knew that we were all on the same page.

Later in my working life, I had a job as a teaching assistant. The big crazy nights for us were when we had to mark exams. Marking exams always meant a long night. We had pizza. 🍕 Helping someone move? Pizza. Big collection of unpaid volunteers? Pizza. All of these events have two things in common:

- People working harder than usual or expected.

- Everyone enjoying shared pizza.

When things are going to require extra effort, let the team know that you understand that. Make them feel appreciated and make sure that they are aligned. Pizza aligns people. Everybody loves pizza. It is a communal food. It’s about what it represents and what it signals to your team. It’s a carrot. It’s an incentive, sure, but we weren’t working for the pizza on those busy nights. We were working for each other. Our glory of getting through another busy Friday was celebrated as a team. The pizza enabled us to look back and say "we fucking did it, mate": another one in the books.